TL;DR: Large context windows are powerful, but not enough. If you want AI that’s fast, accurate, and controllable—RAG still matters. Here’s why.

A Quiet Revolution in AI

AI models aren’t just getting smarter—they’re getting way better at remembering.

One of the biggest breakthroughs in 2024 wasn’t raw intelligence. It was memory.

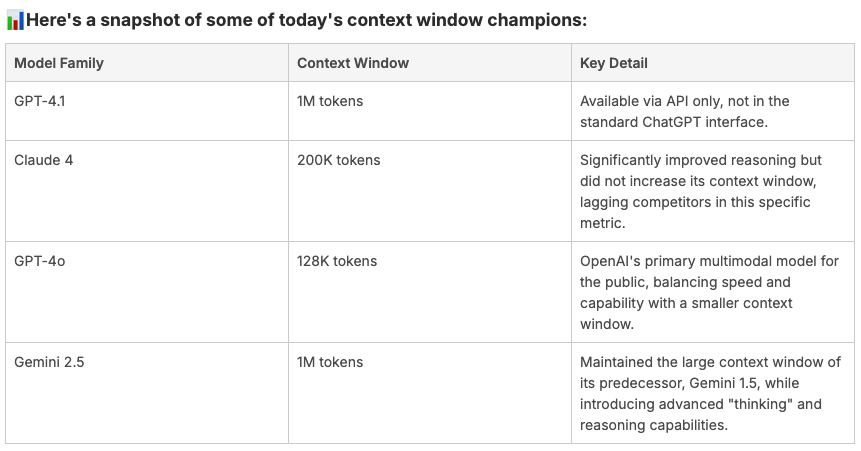

Thanks to massive context windows in models like Claude 4, GPT-4o, and Gemini 2.5, AI can now process the equivalent of an entire book, a stack of podcast transcripts, or a multi-month email thread in a single interaction.

That’s a big deal.

It means AI can now do things like:

- Review your whole customer journey before replying

- Reference all your past content while helping write new posts

- Understand your business deeply instead of giving surface-level answers

For creators, founders, and teams using AI to scale, these huge memory upgrades open up an entirely new playing field.

But here’s the catch:

More memory doesn’t always mean more control.

And that’s exactly where RAG still plays a critical role.

Let’s break it down.

How Context Windows Got Massive—And Why It Matters

Think of a context window like short‑term memory for an AI model—it’s the amount of information the model can “see” and reason over in a single turn.

- Old context windows were tiny: a few thousand tokens, or just a few pages.

- Now, GPT-4o supports 128K tokens, Claude 200K, and GPT-4.1 and Gemini 2.5 Pro up to 1M, enough for books, entire websites, or multi-month chat logs.

📌 Approximate token-to-page mapping (English prose; the exact ratio varies with the tokenizer and page layout):

- 200K tokens ≈ 500–700 pages

- 128K tokens ≈ 250–400 pages

- 1M tokens ≈ several full-length books
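Where do these numbers come from? A rough converter makes the arithmetic explicit. This is a sketch under our own assumptions: about 0.75 English words per token and 225–300 words per page, which works out to roughly 300–400 tokens per page. Real ratios vary with the tokenizer, the language, and the layout, so treat the output as ballpark figures close to the mapping above, not exact counts.

```python
def estimate_pages(tokens: int, min_tokens_per_page: int = 300, max_tokens_per_page: int = 400) -> tuple[int, int]:
    """Return a (low, high) page estimate for a token count.

    Assumption (ours, not a standard): one page of English prose holds
    roughly 300-400 tokens (~0.75 words per token, 225-300 words per page).
    """
    low = round(tokens / max_tokens_per_page)   # denser pages -> fewer pages
    high = round(tokens / min_tokens_per_page)  # sparser pages -> more pages
    return low, high


for window in (128_000, 200_000, 1_000_000):
    low, high = estimate_pages(window)
    print(f"{window:>9,} tokens ~ {low:,}-{high:,} pages")
```

With these assumptions, 200K tokens comes out at about 500–667 pages and 1M tokens at about 2,500–3,333 pages, or several full-length books.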

This lets models reason over everything from multi-page support docs to entire codebases or knowledge bases—all at once.

But just because you can load it all in… doesn’t mean you should.

(That’s where RAG still shines.)

Game-Changing Use Cases for Creators & Businesses

Bigger context windows aren’t just a technical flex.

They unlock use cases that were previously out of reach—especially when you want the AI to “just know” a lot without setting up RAG or custom workflows.

Here’s how different teams can actually use them:

For Creators & Experts

- Upload your book, course, or podcast transcripts

- Ask AI to reference everything you’ve ever said to help draft new content

- Build assistants that sound like you—not a generic AI voice

📌 Example: A coach uploads 12 months of newsletters and gets personalized video scripts, tweets, and responses written in their exact tone.

For Agencies & Freelancers

- Drop in client brand guides, tone rules, past projects, and call notes

- Let AI generate assets that are always on-brand and fully informed

- Save hours digging through folders or Notion docs

📌 Example: A freelance copywriter uses Claude 4 to draft 5 email sequences that match 3 different client voices—without toggling between briefs.

For SaaS & Startups

- Feed in your full help center, product docs, onboarding flows, and sales decks

- Let AI reference everything when summarizing a product, replying to support tickets, or generating demos

- No need to manually fetch links or explain repeat details each time

📌 Example: A startup founder uses GPT-4.1 to generate sales answers that combine product benefits, pricing, and key objections—all based on their latest sales deck and team notes.

📌 Example: A growth marketer drops in 6 months of user feedback and support transcripts, then asks Gemini 2.5 to summarize the top friction points by feature.

💡 Bonus: Want to build your own agent that “just knows” your brand?

Tools like Custom GPTs (OpenAI) and Gems (Google Gemini) make it easy to create agents tailored to your business, set up with your tone, documents, and workflows.

And with context windows now hitting 128K to 1M tokens, these agents can finally stay fully informed without complex setup.

But... Large Context Windows Have Limits

Yes, giant context windows are powerful—but they’re not magic.

Here’s where they fall short:

- They’re expensive: Input is billed per token on every request, so stuffing 100K+ tokens into a prompt can cost 10–20x more than a RAG setup that sends only the few thousand tokens that matter, especially at scale.

- They’re slow: The more you feed in, the slower the response. Large inputs increase latency, which is a dealbreaker when users expect instant answers.

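- You lose control: You can ask the model to prioritize a document, but you can’t enforce it. The model decides what matters, and it may overlook the document you actually wanted it to use, especially when that document is buried in the middle of a very long prompt.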

- No source transparency: Stuffed context doesn’t give you reliable attribution out of the box. Tracing a claim back to a URL, doc, or product FAQ usually takes custom tooling.

- Limited logic and workflows: You can’t chain steps, route responses, or qualify leads. Context alone doesn’t give you behavior—it just gives you more memory.
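The cost gap in the first bullet is simple arithmetic: you pay for every input token on every request. The sketch below compares 10,000 requests that each send a 100K-token context against the same requests sending only 5K retrieved tokens. The $2 per million input tokens price and the 5K retrieval size are hypothetical placeholders, and the sketch ignores output tokens and the prompt-caching discounts some providers offer, which can narrow the gap.

```python
# Hypothetical price in USD per 1M input tokens; check your provider's pricing page.
PRICE_PER_MILLION_INPUT_TOKENS = 2.00


def input_cost(tokens_per_request: int, requests: int,
               price_per_million: float = PRICE_PER_MILLION_INPUT_TOKENS) -> float:
    """Input-token cost in USD (ignores output tokens and prompt caching)."""
    return tokens_per_request * requests * price_per_million / 1_000_000


# Whole knowledge base in every prompt vs. only the retrieved chunks.
full_context = input_cost(tokens_per_request=100_000, requests=10_000)
rag = input_cost(tokens_per_request=5_000, requests=10_000)

print(f"Full context: ${full_context:,.2f}")
print(f"RAG:          ${rag:,.2f}")
print(f"Ratio:        {full_context / rag:.0f}x")
```

Here the ratio is simply 100K / 5K = 20x; retrieving 10K tokens per request instead would give 10x, which brackets the 10–20x range above.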

And yes, these limits still apply in ChatGPT Plus, Gemini Pro, and Claude Pro. You’re not billed per token, but the underlying constraints (latency, cost to the provider, lack of control) are still there.

✅ So while larger context windows are a huge leap forward, they don’t replace real retrieval, workflow logic, or source-grounded answers.

That’s where RAG shines.

This Is Why RAG Still Wins

RAG = retrieval-augmented generation

Instead of cramming every doc, deck, or support page into a massive prompt, RAG lets the AI pull only what’s relevant—right when it’s needed.

It’s like giving your AI a smart research assistant that knows exactly where to look.
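Here is a minimal sketch of the retrieve-then-generate idea. To stay dependency-free, it scores chunks with bag-of-words cosine similarity as a stand-in for the embedding search a real RAG system would typically use. The chunk ids and contents are hypothetical, and the function names are ours, not any library's API. Only the top-k chunks make it into the prompt, each tagged with its id.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k chunks most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda cid: cosine(q, Counter(tokenize(chunks[cid]))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: dict[str, str], k: int = 2) -> str:
    """Put only the top-k chunks into the prompt instead of every document."""
    context = "\n".join(f"[{cid}] {chunks[cid]}" for cid in retrieve(query, chunks, k))
    return f"Answer using only these sources and cite their ids:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge-base chunks.
chunks = {
    "pricing-faq": "The Pro plan costs 49 dollars per month and includes 5 seats.",
    "onboarding": "Invite your team from the settings page during onboarding.",
    "refunds": "Refunds are available within 14 days of purchase.",
}
print(build_prompt("How much does the Pro plan cost per month?", chunks, k=1))
```

Because each chunk keeps its id, the model can be asked to cite `[pricing-faq]` in its answer, which is the kind of source transparency described below.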

Here’s why it works better:

- More efficient: You only process what’s needed. No wasted tokens on 50-page PDFs or irrelevant onboarding slides.

- Faster answers: Smaller context = lower latency = faster user experience.

- More control: You decide what gets indexed, how it's chunked, what’s prioritized, and what gets surfaced.

- Source transparency: Every answer can link directly back to its original source—crucial for internal ops, sales teams, and compliance-heavy workflows.

- Logic, routing, and qualification: A static context window only gives the model more to read, while a RAG pipeline is a workflow you control, so you can add decision layers. Route users, qualify leads, trigger internal processes, or personalize flows before the AI even responds.
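The routing and qualification idea is easiest to picture as a small decision layer that runs before any text is generated. This is a minimal sketch: the route names, keyword rules, and the 50-person qualification threshold are all hypothetical, and keyword matching stands in for whatever classifier or LLM call a real system would use.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    name: str    # what the agent should do next
    reason: str  # why, for logging and analytics


# Hypothetical keyword rules, checked in order. A real system might use a
# trained classifier or an LLM call here instead.
RULES = [
    ("book_call", {"demo", "call", "meeting"}, "visitor wants to talk to someone"),
    ("pricing", {"price", "pricing", "cost", "plan"}, "pricing question"),
    ("support", {"error", "bug", "broken", "refund"}, "support issue"),
]


def route(message: str, company_size: Optional[int] = None) -> Route:
    """Decide what happens before any answer is generated."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for name, keywords, reason in RULES:
        if words & keywords:
            # Hypothetical qualification rule: pricing questions from teams of 50+ go to sales.
            if name == "pricing" and company_size is not None and company_size >= 50:
                return Route("sales_handoff", "qualified lead asking about pricing")
            return Route(name, reason)
    # Nothing matched: fall through to a normal retrieval-grounded answer.
    return Route("rag_answer", "no rule matched")


print(route("What does the Pro plan cost?", company_size=120))
print(route("Can I book a demo?"))
print(route("How do I invite teammates?"))
```

Keeping the decision outside the model means the same question can trigger a handoff, a booking link, or a grounded answer, depending on rules you can read and change.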

Perfect for internal and customer-facing AI agents:

Internal Use Cases

- An HR or ops agent that answers employee questions by pulling from policies, benefits docs, and Slack threads

- A sales enablement agent that helps reps find the right pitch, pricing FAQ, or battlecard on the fly—during calls or CRM use

- An internal product support bot that references engineering docs, changelogs, and bug trackers to answer questions faster than scrolling through Notion

Customer-Facing Use Cases

- AI agents embedded on your site or app that surface accurate product info instantly

- 24/7 support bots that actually answer questions—not just redirect to articles

- Lead gen flows that explain pricing, qualify leads, and guide them to book a call

- Top-of-funnel (TOFU) and demo flows where every second and every answer matters

Hybrid Is the Future

The smartest AI setups don’t choose between long context or RAG—they use both.

Here’s how it works:

- Large context windows: Handle recent chat history, user behavior, or back-and-forth threads—so the AI feels present and coherent.

- RAG: Fetches the right info from your docs, knowledge base, or CRM—so responses stay factual, helpful, and on-brand.
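One way to picture this split: keep as much recent conversation as fits a token budget, and let retrieval supply the facts. This is a minimal sketch assuming a crude 4-characters-per-token estimate and a hypothetical prompt layout; it is not a description of how Surfn or any specific product assembles prompts.

```python
def approx_tokens(text: str) -> int:
    """Rough token count: ~4 characters per token (an assumption, not a real tokenizer)."""
    return max(1, len(text) // 4)


def build_hybrid_prompt(history: list[tuple[str, str]], retrieved: list[str],
                        question: str, history_budget: int = 50) -> str:
    """Keep the most recent turns that fit the budget, then add retrieved facts."""
    kept: list[str] = []
    used = 0
    for role, text in reversed(history):  # walk from newest to oldest
        line = f"{role}: {text}"
        cost = approx_tokens(line)
        if used + cost > history_budget:
            break  # this turn and everything older is dropped
        kept.append(line)
        used += cost
    kept.reverse()  # restore chronological order
    facts = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Conversation so far:\n" + "\n".join(kept)
        + "\n\nRelevant facts from the knowledge base:\n" + facts
        + f"\n\nUser: {question}"
    )


# Hypothetical conversation and retrieved snippet.
history = [
    ("user", "Hi, I run a small design agency."),
    ("assistant", "Nice to meet you! How can I help?"),
    ("user", "Do you integrate with Calendly?"),
]
retrieved = ["Meetings can be booked via Calendly."]
print(build_hybrid_prompt(history, retrieved, "Great, how do I set it up?", history_budget=24))
```

With a 24-token budget, the two newest turns fit and the opening message is dropped, while the retrieved fact is always included.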

The result?

- Speed (no bloated prompts)

- Memory (conversational continuity)

- Accuracy (backed by real data)

It’s the best of both worlds—and it’s how Surfn AI is built.

Want to Build an AI Agent That Actually Works?

Most AI just talks.

Surfn is designed to convert.

With Surfn, you can create a customer- or audience-facing, RAG-powered AI agent that supports your business across key touchpoints:

- Branded, interactive chat experiences that feel human

- Lead capture and qualification that adapts to each visitor

- Conversion-optimized flows powered by your own data

- 24/7 support that’s fast, accurate, and grounded in real sources

- Instant meeting booking via Calendly or your preferred tool

- On-brand messaging that speaks in your voice—not a robotic script

Surfn helps you optimize every touchpoint to boost engagement, conversion, and growth.

It's not just AI. It's your brand, amplified.

👉 Check out our guide: How to Create a RAG AI Agent

👉 Or sign up to build your own agent (no code required)

Smarter conversations. Better outcomes. Built for real businesses.

Update (Sep 8, 2025): 🚀 Surfn is now live! Create your free AI agent today → and start turning traffic into conversions—on autopilot.


Story by Rupali Renjen

Rupali Renjen is the co-founder of Surfn AI, empowering creators and brands with AI agents that boost conversions, automate workflows, and drive business growth.

🚀 Learn more at surfn.ai | Connect on Twitter | LinkedIn | rupalirenjen.com